Hello, I'm Keunhong Park

I am a founding member at World Labs where I lead model pretraining. My research focuses on world models—from large-scale pretraining to real-time generation—combining diffusion models with 3D scene representations. See our recent Marble and RTFM releases.

Previously I was a research scientist at Google, where I built technology to generate 3D assets for products on Google Search. I received my Ph.D. from the University of Washington in 2021, advised by Ali Farhadi and Steve Seitz.

Publications

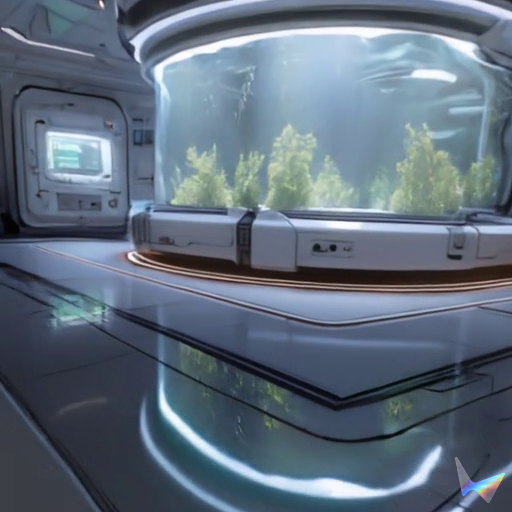

RTFM: Real-Time Frame Model

Keunhong Park with collaborators at World Labs

Blog Post, 2025

A real-time, autoregressive diffusion model renders persistent 3D worlds on a single GPU.

IllumiNeRF: 3D Relighting without Inverse Rendering

Xiaoming Zhao, Pratul Srinivasan, Dor Verbin, Keunhong Park, Ricardo Martin-Brualla, Philipp Henzler

NeurIPS, 2024

3D relighting by distilling samples from a 2D image relighting diffusion model into a latent-variable NeRF.

ReconFusion: 3D Reconstruction with Diffusion Priors

Rundi Wu, Ben Mildenhall, Philipp Henzler, Keunhong Park, Ruiqi Gao, Daniel Watson, Pratul Srinivasan, Dor Verbin, Jonathan T. Barron, Ben Poole, Aleksander Holynski

CVPR, 2024

Using a multi-view, image-conditioned diffusion model to regularize a NeRF enables few-view reconstruction.

CamP: Camera Preconditioning for Neural Radiance Fields

Keunhong Park, Philipp Henzler, Ben Mildenhall, Jonathan T. Barron, Ricardo Martin-Brualla

SIGGRAPH Asia, 2023 (Journal Paper)

Preconditioning camera optimization during NeRF training significantly improves NeRFs' ability to jointly recover the scene and camera parameters.

HyperNeRF: A Higher-Dimensional Representation for Topologically Varying Neural Radiance Fields

Keunhong Park, Utkarsh Sinha, Peter Hedman, Jonathan T. Barron, Sofien Bouaziz, Dan B Goldman, Ricardo Martin-Brualla, Steven M. Seitz

SIGGRAPH Asia, 2021

By applying ideas from level set methods, we can represent topologically changing scenes with NeRFs.

FiG-NeRF: Figure Ground Neural Radiance Fields for 3D Object Category Modelling

Christopher Xie, Keunhong Park, Ricardo Martin-Brualla, Matthew A. Brown

3DV, 2021

Given a lot of images of an object category, you can train a NeRF to render them from novel views and interpolate between different instances.

Nerfies: Deformable Neural Radiance Fields

Keunhong Park, Utkarsh Sinha, Jonathan T. Barron, Sofien Bouaziz, Dan B Goldman, Steven M. Seitz, Ricardo Martin-Brualla

ICCV, 2021 (Oral Presentation)

Learning deformation fields with a NeRF lets you reconstruct non-rigid scenes with high fidelity.

LatentFusion: End-to-End Differentiable Reconstruction and Rendering for Unseen Object Pose Estimation

Keunhong Park, Arsalan Mousavian, Yu Xiang, Dieter Fox

CVPR, 2020

By learning to predict geometry from images, you can do zero-shot pose estimation with a single network.

PhotoShape: Photorealistic Materials for Large-Scale Shape Collections

Keunhong Park, Kostas Rematas, Ali Farhadi, Steven M. Seitz

SIGGRAPH Asia, 2018 (Journal Cover)

By pairing large collections of images, 3D models, and materials, you can create thousands of photorealistic 3D models fully automatically.